Wednesday, April 22, 2009

Exact Globe and Exact Synergy supports SQL Server 2008 as of product update 393.

As of 21 april 2009 product update 393 for Exact Globe and Exact Synergy is generally available for all customers worldwide with an active maintenance agreement, except for customers with custom solutions .

As of product update 393 both Exact Globe and Exact Synergy supports the use of SQL Server 2008. This means that Exact starts selling this new version of SQL Server. Please contact your local Exact office or reseller. If you want to have a look at the unique selling points of SQL Server 2008, please have a look at the overview on the Microsoft website. For an overview of technical documentation for SQL Server 2008, please refer to the Books Online.

SQL Server 2008 licenses grant customers the right to downgrade, which means that customers wanting to use SQL Server 2005 can still do so when purchasing SQL Server 2008 licenses. The upgrade to SQL Server 2008 can then take place at their own convenience. This is also important to know for customers purchasing or using Exact Synergy Enterprise: they are adviced to purchase SQL Server 2008 licenses and use SQL Server 2005 until product update 241 of Exact Synergy Enterprise, which suports SQL Server 2008, is available.

Tuesday, April 21, 2009

Exact System Information application: Why should you use it?

This is my third blog post about the Exact System Information application. In the first 2 blogposts I introduced the reason of the Exact System Information application and what kind of information is retrieved by the Exact System Information application. In this blogpost I will explain why you as valuable customer should start this application on your SQL Server.

With the information we received from your Exact databases, we are able to improve our software. However improvements in the software based on this data will cost time and you will not directly benefit of it.

So the question is: What kind of improvements are directly possible for you to implement?

I will give some examples to:

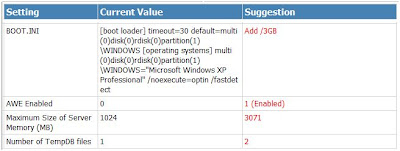

SQL Server configuration improvements:

Example: Database configuration improvement scripts:

Example: Not used Exact functionality: Clean up historical journal records to save database size.

Thank you already for your cooperation and trust in Exact Software.

With the information we received from your Exact databases, we are able to improve our software. However improvements in the software based on this data will cost time and you will not directly benefit of it.

So the question is: What kind of improvements are directly possible for you to implement?

I will give some examples to:

SQL Server configuration improvements:

Example: Database configuration improvement scripts:

Example: Not used Exact functionality: Clean up historical journal records to save database size.

These are some examples. To experience all possible improvements, please start the application on your SQL server. The application is available on : ftp://ftp.exact.nl/Software/ESI/ExactSysInfo.zip. Extract the zip file on your SQL server and start the ExactSysInfo.exe

After we have received your data we will analyze the data. Via the support department you can request the improvement report. In the future we will distribute this improvement report via our portals.Thank you already for your cooperation and trust in Exact Software.

Labels:

Exact System Information,

improvements,

performance,

sql

Wednesday, April 15, 2009

Troubleshooting Performance Problems in SQL Server 2008

Microsoft has published an interesting article (97 pages) about troubleshooting Performance Problems in SQL Server 2008. This is a must to read for people interesting in performance analyzing and optimization of your SQL Server. It provide information to find:

- Resource bottlenecks

- CPU bottlenecks

- Memory bottlenecks

- I/O bottlenecks

- Tempdb issues

- Slow Running Queries.

Enjoy it.

Labels:

2008,

performance,

server,

sql,

troubelshooting

Tuesday, April 7, 2009

SQL Server maintenance on your SQL databases to improve performance.

Image credit: Gopal1035

Image credit: Gopal1035Introduction:

Performance of applications on SQL Server depends on different factors. This can be hardware configuration, hardware capacity, application problems, SQL server configuration and maintenance on SQL Server database. See for instance documents:

This document will describe what kind of database maintenance activities can be done to get better performance of your SQL database. Be aware that this will contribute to a better performing system, but this will not the solution for all performance problems.

Goal:

Better performance of the database with the same hardware and software.

Solution:

To get better performance of your SQL database next maintenance activities can be executed.

- Defragmentation of clustered indexes

- Update statistics of most important tables with full scan

- Defragmentation indexes

Ad 1) Defragmentation of clustered indexes.

How to:

A clustered index indicates the order in which table data should be physically stored. The table data is sorted and stored according to the key column or columns specified for the clustered index. Because the data is physically stored in a specific order, you can create only one clustered index per table. For instance the table GBKMUT of Exact Globe or Exact Synergy Enterprise has a clustered index on the field ID. All records will be physically stored in the order of ID. New records will be added at the end of the table. All data rows are stored in data pages. One data page contains multiple data rows. When GBKMUT records are deleted, for instance MRP records, you will get caps between the ID’s. A part of the data page to which the deleted GBKMUT records belongs is now empty. This is called fragmentation of the clustered index. This will result in more reads to retrieve the same amount of data. With next command you can retrieve the fragmentation percentage of a clustered index:

dbcc showcontig ('<tablename>', 1)

Example for table GBKMUT

dbcc showcontig ('GBKMUT', 1)

When executing dbcc showcontig ('GBKMUT', 1) next results are displayed.

DBCC SHOWCONTIG scanning 'gbkmut' table...

Table: 'gbkmut' (1019866700); index ID: 1, database ID: 5

TABLE level scan performed.

- Pages Scanned................................: 3037060

- Extents Scanned..............................: 385999

- Extent Switches..............................: 535838

- Avg. Pages per Extent........................: 7.9

- Scan Density [Best Count:Actual Count].......: 70.85% [379633:535839]

- Logical Scan Fragmentation ..................: 3.53%

- Extent Scan Fragmentation ...................: 37.17%

- Avg. Bytes Free per Page.....................: 612.5

- Avg. Page Density (full).....................: 92.43%

DBCC execution completed. If DBCC printed error messages, contact your system administrator.

The most optimal situation is a table with a scan density of 100 %. In this example 30 % of the pages are not used. To optimize the scan density use next command for a unique clustered index:

CREATE UNIQUE CLUSTERED INDEX <Clustered index name> ON <tablename> (<fields) with drop_existing

To optimize the scan density use next command for a non unique clustered index:

CREATE CLUSTERED INDEX <Clustered index name> ON <tablename> (<fields) with drop_existing

Example for table GBKMUT batch 360 which has a unique clustered index.

CREATE UNIQUE CLUSTERED INDEX PK_gbkmut ON gbkmut (ID) with drop_existing

When:

Not scheduled. Check can be executed on a weekly or monthly basis. Rebuild should be done when the specific table is not used by other users. Therefore a repair can’t be scheduled on a regular basis.

Ad 2) Update statistics of most important tables with full scan

How to:

Statistics are the basis for the SQL optimizer to generate the most optimal query plan. By default statistics are updated automatically. These statistics are updated by using a sample of the data. This is correct but sometimes it can happen that the default sample rate is too small. In these cases an update of the statistics should be execute.

Statistics can be updated with next command:

UPDATE STATISTICS <Table name> WITH FULLSCAN

To update table gbkmut with a fullscan use next command:

UPDATE STATISTICS GBKMUT WITH FULLSCAN

Depending on the amount of indexes and the amount of records this can take a while.

When:

Updating statistics of a table can be done while the table is used by other people. However it will have a negative impact on performance because all records in the table are retrieved. Therefore this can be scheduled on a daily or weekly basis when the use of the system is minimal. For instance during the night or during the weekend.

Ad 3) Defragmentation of indexes.

During the use of a table (Update, insert and deletes) fragmentation of data and indexes can occur. This will result in more reads to retrieve the same amount of data.

How to:

With next script all indexes of all tables in the database with a fragmentation > 30 % will be optimized.

/*Perform a 'USE <database name>' to select the database in which to run the script.*/

-- Declare variables

SET NOCOUNT ON

DECLARE @tablename VARCHAR (128)

DECLARE @Query NVARCHAR(255)

DECLARE @IxName VARCHAR (255)

DECLARE @IxId Int

DECLARE @ObjId Int

DECLARE @ScanDensity DECIMAL

DECLARE @maxfrag DECIMAL

-- Decide on the mimimum fragmentation to allow

SELECT @maxfrag = 70.0

-- Declare cursor

DECLARE tables CURSOR FOR

SELECT Name FROM sysobjects where xtype='U'

-- Create the table

CREATE TABLE #fraglist (

ObjectName CHAR (255),

ObjectId INT,

IndexName CHAR (255),

IndexId INT,

Lvl INT,

CountPages INT,

CountRows INT,

MinRecSize INT,

MaxRecSize INT,

AvgRecSize INT,

ForRecCount INT,

Extents INT,

ExtentSwitches INT,

AvgFreeBytes INT,

AvgPageDensity INT,

ScanDensity DECIMAL,

BestCount INT,

ActualCount INT,

LogicalFrag DECIMAL,

ExtentFrag DECIMAL)

-- Open the cursor

OPEN tables

-- Loop through all the tables in the database

FETCH NEXT

FROM tables

INTO @tablename

WHILE @@FETCH_STATUS = 0

BEGIN

-- Do the showcontig of all indexes of the table

INSERT INTO #fraglist

EXEC ('DBCC SHOWCONTIG (''' + @tablename + ''')

WITH FAST, TABLERESULTS, ALL_INDEXES, NO_INFOMSGS')

FETCH NEXT

FROM tables

INTO @tablename

END

-- Close and deallocate the cursor

CLOSE tables

DEALLOCATE tables

-- Declare cursor for list of indexes to be defragged

DECLARE indexes CURSOR FOR

SELECT ObjectName, ObjectId, IndexName, IndexId, ScanDensity

FROM #fraglist

WHERE ScanDensity <= @maxfrag

AND INDEXPROPERTY (ObjectId, IndexName, 'IndexDepth') > 0

-- Open the cursor

OPEN indexes

-- loop through the indexes

FETCH NEXT

FROM indexes

INTO @TableName, @ObjID, @IxName, @IxId, @ScanDensity

WHILE @@FETCH_STATUS = 0

BEGIN

set @Query = 'DBCC IndexDefrag (0,' + rtrim(@tablename) +','+ rtrim(@IxName) + ') with no_infomsgs --Current ScanDensity = ' + rtrim(@ScanDensity)

print @Query

EXEC sp_executesql @Query

FETCH NEXT

FROM indexes

INTO @TableName, @ObjID, @IxName, @IxId, @ScanDensity

END

CLOSE indexes

DEALLOCATE indexes

-- Delete the temporary table

DROP TABLE #fraglist

GO

DECLARE @tablename VARCHAR (128)

DECLARE @Query NVARCHAR(255)

DECLARE @IxName VARCHAR (255)

DECLARE @IxId Int

DECLARE @ObjId Int

DECLARE @ScanDensity DECIMAL

DECLARE @maxfrag DECIMAL

-- Decide on the mimimum fragmentation to allow

SELECT @maxfrag = 70.0

-- Declare cursor

DECLARE tables CURSOR FOR

SELECT Name FROM sysobjects where xtype='U'

-- Create the table

CREATE TABLE #fraglist (

ObjectName CHAR (255),

ObjectId INT,

IndexName CHAR (255),

IndexId INT,

Lvl INT,

CountPages INT,

CountRows INT,

MinRecSize INT,

MaxRecSize INT,

AvgRecSize INT,

ForRecCount INT,

Extents INT,

ExtentSwitches INT,

AvgFreeBytes INT,

AvgPageDensity INT,

ScanDensity DECIMAL,

BestCount INT,

ActualCount INT,

LogicalFrag DECIMAL,

ExtentFrag DECIMAL)

-- Open the cursor

OPEN tables

-- Loop through all the tables in the database

FETCH NEXT

FROM tables

INTO @tablename

WHILE @@FETCH_STATUS = 0

BEGIN

-- Do the showcontig of all indexes of the table

INSERT INTO #fraglist

EXEC ('DBCC SHOWCONTIG (''' + @tablename + ''')

WITH FAST, TABLERESULTS, ALL_INDEXES, NO_INFOMSGS')

FETCH NEXT

FROM tables

INTO @tablename

END

-- Close and deallocate the cursor

CLOSE tables

DEALLOCATE tables

-- Declare cursor for list of indexes to be defragged

DECLARE indexes CURSOR FOR

SELECT ObjectName, ObjectId, IndexName, IndexId, ScanDensity

FROM #fraglist

WHERE ScanDensity <= @maxfrag

AND INDEXPROPERTY (ObjectId, IndexName, 'IndexDepth') > 0

-- Open the cursor

OPEN indexes

-- loop through the indexes

FETCH NEXT

FROM indexes

INTO @TableName, @ObjID, @IxName, @IxId, @ScanDensity

WHILE @@FETCH_STATUS = 0

BEGIN

set @Query = 'DBCC IndexDefrag (0,' + rtrim(@tablename) +','+ rtrim(@IxName) + ') with no_infomsgs --Current ScanDensity = ' + rtrim(@ScanDensity)

print @Query

EXEC sp_executesql @Query

FETCH NEXT

FROM indexes

INTO @TableName, @ObjID, @IxName, @IxId, @ScanDensity

END

CLOSE indexes

DEALLOCATE indexes

-- Delete the temporary table

DROP TABLE #fraglist

GO

When:

Defragmentation of indexes can be done while the table is used by other people. However it will have a negative impact on performance because all indexes in the table are retrieved and updated. Therefore this can be scheduled on a weekly basis when the use of the system is minimal. For instance during the weekend.

Conclusion:

However SQL Server is largely self-configuring, self-tuning, and self-managing some maintenance is still necessary to get better performance. Defragmentation of clustered indexes and Update statistics of most important tables with full scan should be done manually if necessary. Defragmentation of indexes should be done on a weekly basis and can be scheduled.

Labels:

index,

maintenance,

performance,

sql

Subscribe to:

Posts (Atom)